Jaakko Väyrynen

I currently work at Utopia Analytics Oy.


Contact Information

- Email: jaakko.j.vayrynen@aalto.fi

- Skype: jaakkovayrynen

Publications

See my publications page. See also Google Scholar.

Research Interests

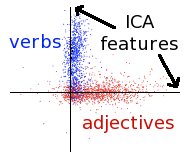

Independent component analysis for understanding linguistic elements

Independent component analysis (ICA) is a computational method for learning the underlying mixture components from several observed mixtures. Words in text can be represented as mixtures of nearby words. Therefore, ICA and other machine learning methods can be useful in creating models for words and other linguistic elements in an unsupervised fashion. I'm interested in finding ways to encode different kinds of linguistic information, such as synonyms and other relations between words as well as syntactic or semantic information. Comparison between the learned structures and manually constructed linguistic knowledge is also a challenge.

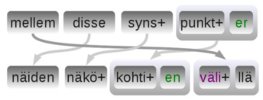

Statistical machine translation with unsupervised morpheme discovery

Statistical machine translation (SMT) is about creating and applying probabilistic and statistical models to improve translation from one language to another. The models are trained on example translations of long text segments, and the task is to create a method that generalizes beyond the training examples. I'm interested in fully automatic SMT, in which there is no human participation in either the translation or the model building. In particular, we are considering unsupervised morpheme discovery for more accurate matching of translation equivalents with larger coverage. This is important when there are few training examples or the language is morphologically complex.

Word spaces for encoding information

A word space represents words in a vector space constructed from information about word occurrences. I'm interested in learning how different kinds of information can be encoded in a vector space by changing how word occurrences are counted. Moreover, a single vector space represents each word as a single point. This radically simplifies the ambiguous and complex nature of natural language, as the relationship between any two words is reduced to a single distance measure between them. I believe that modeling each word as a cloud of points instead of a single point, and combining word spaces that encode different relations, will help create much richer and more powerful word space models. Furthermore, encoding multiple languages in the same word space provides an interesting tool for machine translation.
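As a rough illustration of how the counting scheme shapes a word space, the sketch below builds sparse co-occurrence vectors from a sliding window and compares words with cosine similarity. The function names `build_word_space` and `cosine`, the `window` parameter, and the toy corpus are all assumptions made for this example; they are not taken from any particular toolkit or from my own experiments.

```python
import math
from collections import Counter, defaultdict


def build_word_space(sentences, window=2):
    """Count, for every word, the words seen within `window` positions of it.

    Hypothetical helper: each word maps to a sparse vector (a Counter)
    indexed by its neighbouring words.
    """
    space = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.lower().split()
        for i, word in enumerate(tokens):
            lo = max(0, i - window)
            hi = min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    space[word][tokens[j]] += 1
    return space


def cosine(u, v):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * v[key] for key, count in u.items() if key in v)
    norm_u = math.sqrt(sum(c * c for c in u.values()))
    norm_v = math.sqrt(sum(c * c for c in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


# Toy corpus, invented for illustration only.
corpus = [
    "the cat drinks milk",
    "the dog drinks water",
    "the car needs fuel",
]
space = build_word_space(corpus, window=2)
print(cosine(space["cat"], space["dog"]))  # share "the" and "drinks"
print(cosine(space["cat"], space["car"]))  # share only "the"
```

Changing `window`, or counting only words to the left or to the right, changes which relations end up close together in the space, which is the kind of design choice described above. Note that each word is still a single point here; the cloud-of-points idea is not covered by this sketch.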

Information theoretic methods for automatic evaluation of translations

Development of statistical models for machine translation is an empirical process that frequently requires analyzing the quality of the generated translations. Human evaluation by professionals is the optimal solution in terms of quality, but it is typically reserved for the final evaluation of a method because it is slow and expensive. There are many methods for the automatic evaluation of translation quality that can be used during model development. However, most of them are heuristic and consider only a small portion of the available information. I'm interested in using compression as an approximation to algorithmic information theory and applying it to the evaluation of translation quality. Its benefits include a clear theoretical model and the ability to model language as a sequence of characters, as opposed to many other methods, which consider only word sequences. The method works by comparing the lengths of two outputs: 1) the compared strings compressed independently and then concatenated, and 2) the compared strings first concatenated and then compressed together.
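The comparison of the two compressed lengths can be sketched with a general-purpose compressor. The example below uses Python's standard `zlib` module as a stand-in; the function names and the particular normalization (the relative saving from compressing the strings together) are assumptions chosen for illustration, not necessarily the exact measure used in my work.

```python
import zlib


def compressed_len(text: str) -> int:
    """Length in bytes of the zlib-compressed UTF-8 encoding of `text`."""
    return len(zlib.compress(text.encode("utf-8"), 9))


def shared_information(a: str, b: str) -> float:
    """Relative saving from compressing `a` and `b` together.

    Hypothetical score: compares 1) the strings compressed independently
    (lengths added) with 2) the strings concatenated and then compressed.
    A larger value suggests the strings share more character-level structure.
    """
    separate = compressed_len(a) + compressed_len(b)
    joint = compressed_len(a + b)
    return (separate - joint) / separate


# Invented example strings, for illustration only.
reference = "the quick brown fox jumps over the lazy dog near the river bank"
close = "the quick brown fox jumped over a lazy dog near the river bank"
unrelated = "zzz qqq 12345 completely unrelated xylophone"

print(shared_information(reference, close))
print(shared_information(reference, unrelated))
```

Because the compressor works on bytes, the score is sensitive to character-level overlap such as shared stems and inflections, not only to whole-word matches. On strings this short, the fixed overhead of the compressed format also contributes to the score, so in practice longer texts give more meaningful values.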

Modeling the statistical structure of images

Models based on maximization of sparseness in images or temporal coherence in image sequences lead to the emergence of the principal properties of simple cells in biological visual systems. Taking dependencies between linear filter outputs into account permits the modeling of complex cells and topographic organization as well. Independent component analysis is one of the computational methods that can be used to maximize the sparseness of linear filter outputs. Combining the three statistical properties yields filter activations that are contiguous in both space and time, with topographically ordered filters.

Missing values with the self-organizing map

Missing values and huge data matrices create a computational problem that needs to be addressed. I have enabled the SOM toolbox to handle large matrices with many missing values under certain assumptions. This facilitates, for instance, training a map based on the full Netflix data, which creates a map of the movies and the users. A map of the rating distribution is also interesting even though the data is compressed and dense.
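One common convention for missing values in a self-organizing map is to compute distances, and apply updates, over the observed components only. The sketch below illustrates that convention in plain Python, with `None` marking a missing entry. It is an assumption-laden toy, not the SOM toolbox code: the function names are hypothetical, and the neighborhood function and the large-matrix handling are left out.

```python
def masked_sq_distance(x, w):
    """Squared Euclidean distance over the observed (non-None) entries of x."""
    return sum((xi - wi) ** 2 for xi, wi in zip(x, w) if xi is not None)


def best_matching_unit(x, codebook):
    """Index of the codebook vector closest to x on its observed entries."""
    return min(range(len(codebook)),
               key=lambda i: masked_sq_distance(x, codebook[i]))


def update_unit(x, w, rate):
    """Move w toward x on observed entries; leave the others unchanged."""
    return [wi if xi is None else wi + rate * (xi - wi)
            for xi, wi in zip(x, w)]


# Toy data, invented for illustration: two map units, one sample
# with its second component missing.
codebook = [[1.0, 1.0, 1.0], [5.0, 5.0, 5.0]]
sample = [4.5, None, 5.5]

bmu = best_matching_unit(sample, codebook)
codebook[bmu] = update_unit(sample, codebook[bmu], rate=0.5)
print(bmu, codebook[bmu])
```

With rating data such as the Netflix set, most entries of each sample are missing, so skipping them in both the distance and the update keeps the cost per sample proportional to the number of observed ratings.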

Teaching

- T-61.5020 Statistical modeling of natural language, Spring 2008-2012

- T-61.6090 Special course in language technology, Autumn 2005

- T-93.210 Basic course in programming T1, Autumn 2000-2001 and Spring 2002

Links

- Computational Cognitive Systems group

- Cognitive Systems blog

- Experimental statistical machine translation demo

I also have a personal page.